There’s a major problem holding back industrial digitalization. Traditional analytics and data management are inadequate. Only data fusion and contextualization hold the solution.

What’s wrong with traditional data management?

Traditional centralized master data management (MDM) is failing to deliver the agility in data-driven operations that industrial digitalization calls for. Nor can it provide the use-case-specific view of data quality that is required, which means versioning data to suit the needs of each use case rather than enforcing quality horizontally across the data itself. Horizontal data quality tools and techniques face a further challenge: they cannot cope with the breadth, variation, and, frankly, noise of operational industrial and machine data.

Industrial machine data offers heavy-asset companies a huge and growing opportunity to extract insights and inform performance-improving actions. To exploit this exponential growth fully, the industry needs to combine machine learning with scalable, agile enablement of subject matter experts (SMEs). It also needs to address high-complexity, high-value use cases despite scarce professional data science resources.

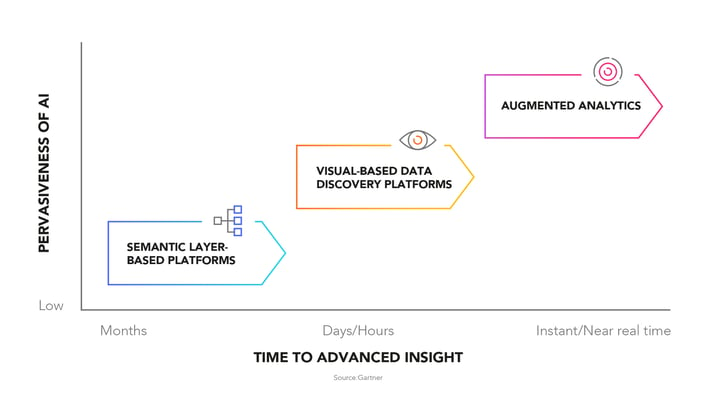

Disruption points in the analytics and business intelligence (BI) market

Looking at the analytics and BI landscape, we can see three main categories of platforms, which differ in how pervasively they use AI and in how quickly they deliver insights to the companies that adopt them.

1. Semantic layer-based platforms

These platforms generally work in an IT-led, descriptive manner, with predefined interactivity and KPIs. They are examples of IT-modeled, traditional data integration, relying on summary data and a data warehouse. The data, relationships, and questions involved must all be defined in advance.

Basic insights take months to achieve, and the low pervasiveness of AI limits how sophisticated those insights can be.

2. Visual-based data discovery platforms

These platforms are more business-led, descriptive, and diagnostic in their approach. They can accommodate free-form user interactivity and provide the best visualization. Another key feature is tool-specific self-service data preparation. The data they work with can be structured, personal, and unmodeled. Like their semantic layer-based counterparts, they require data, relationships, and questions to be defined.

Insights arrive faster, typically within hours or days.

3. Augmented analytics

Finally, we come to the most advanced set of platforms. These are led by machine learning, and can be described as pervasive, auto-descriptive, diagnostic, predictive, and prescriptive. They offer the most significant levels of insight to inform user actions. Relevant patterns are auto-visualized, and conversational analytics are used to ask and answer questions, through natural language processing (NLP), natural language query (NLQ), and natural language generation (NLG).

The system offers suggestions in the user's context or embedded in apps. Crucially, these platforms can also auto-discover new relevant data sources, and handle open data, relationships, and questions.

Insights arrive almost instantaneously, in real time. Augmented analytics platforms are, unsurprisingly, popular in the market.


Collective experiences and democratization of AI are set to change data management

Data management is moving swiftly from a niche pursuit reserved for specialists to a more widely accessible and actionable set of tools, capabilities, and approaches. This democratization of AI and data management is already changing the game.

AI makes it possible to map connections across a broad, complex, and disparate data landscape, fusing different data points together and putting data in context. Collaboration between artificial and human intelligence, with each contributing according to its strengths, has the potential to rebalance and improve the work done by humans and machines.

There is a mass migration towards crowd-sourced and cloud-sourced tooling and towards reusable models and techniques, which can offer greater efficiency, flexibility, and agility while freeing up time and resources. The overall trend is towards democratization of data management: enabling wider teams to perform more data management tasks, and operationalizing data to satisfy the needs of diverse data consumers.

Data and analytics governance needs new foundations

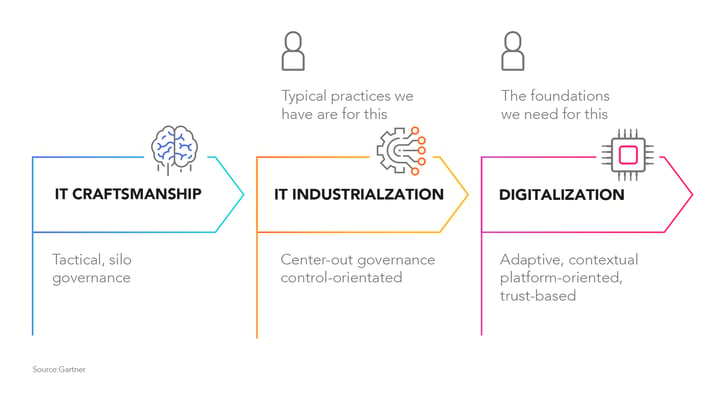

IT has evolved through three major stages: from IT craftsmanship to IT industrialization, and now to digitalization. But many organizations are trying to build digitalization on governance foundations better suited to the previous phase of IT industrialization.

IT craftsmanship was marked by sporadic automation and innovation, with frequent issues affecting the outputs and outcomes. Engagement with the wider business was low, with isolation and disengagement both internally and externally. Governance was tactical, siloed, and technology-centric.

IT industrialization moved beyond this to emphasize services and solutions, efficiency and effectiveness. Business engagement improved and became less isolated, but was directive, compliance-driven, and risk-averse. Governance was less tactical, more center-out, control-oriented, and process-centric.

These industrialization-era foundations are still typical of most heavy-asset companies, despite ever-increasing efforts to implement proper digitalization. Digitalization, the third evolutionary phase, calls for another step change in approach. Outcomes and outputs need to focus on business and operating model transformation. Engagement with the wider business must be dynamic and collaborative; risk-aware but not risk-averse.

Governance for digitalization has to be adaptive, contextual, platform-oriented, and trust-based. Instead of focusing on technology or processes per se, we need to put the product, people, and outcomes at the very center.

A new way: data fusion and contextualization

To achieve the full rewards of true digitalization, heavy-asset companies must embrace not only new technologies but also new approaches, leaving behind the traditional assumptions of data and analytics management and governance.

Cognite uses a powerful blend of machine learning, a rules engine, and subject matter expertise to convert data into actionable knowledge.
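To make the idea of blending rules, machine learning, and human expertise more concrete, here is a minimal, hypothetical sketch of contextualization: linking raw time series names to known asset tags. It is not Cognite's implementation. The tag convention, the function names (`normalize_tag`, `similarity`, `contextualize`), the 0.8 acceptance threshold, and the use of `difflib` string similarity as a stand-in for a trained matching model are all assumptions made for illustration.

```python
import re
from difflib import SequenceMatcher

# Hypothetical tag convention: <area>-<instrument type>-<number>, e.g. "23-PT-92531".
# Separators may be "-", "_", a space, or missing entirely.
TAG_PATTERN = re.compile(r"(?<!\d)(\d{2})[-_ ]?([A-Z]{2,3})[-_ ]?(\d{4,5})(?!\d)")


def normalize_tag(text):
    """Rules step: extract a tag from free text and normalize it, or return None."""
    match = TAG_PATTERN.search(text.upper())
    if match is None:
        return None
    return "-".join(match.groups())


def similarity(a, b):
    """Stand-in for a trained matching model: case-insensitive string similarity in [0, 1]."""
    return SequenceMatcher(None, a.upper(), b.upper()).ratio()


def contextualize(ts_names, asset_tags, threshold=0.8):
    """Link time series names to asset tags.

    Returns (matches, review_queue): matches maps a name to (tag, method);
    review_queue lists (name, best_candidate, score) tuples that a subject
    matter expert should confirm or reject.
    """
    matches, review_queue = {}, []
    known_tags = set(asset_tags)
    for name in ts_names:
        tag = normalize_tag(name)
        if tag in known_tags:
            # The rule produced an exact, known asset tag.
            matches[name] = (tag, "rule")
            continue
        if not asset_tags:
            # Nothing to score against: leave the decision to an expert.
            review_queue.append((name, None, 0.0))
            continue
        # Fall back to scoring every asset and keeping the best candidate.
        best, score = max(
            ((t, similarity(name, t)) for t in asset_tags), key=lambda pair: pair[1]
        )
        if score >= threshold:
            matches[name] = (best, "model")
        else:
            # Too uncertain to accept automatically: route to an expert.
            review_queue.append((name, best, round(score, 2)))
    return matches, review_queue


if __name__ == "__main__":
    assets = ["23-PT-92531", "23-TT-92532", "45-FT-10001"]
    names = ["VAL_23_PT_92531:X.Value", "23-TT-9253", "Compressor outlet pressure"]
    found, to_review = contextualize(names, assets)
    print(found)
    print(to_review)
```

With these sample inputs, the first name is resolved by the rule, the truncated tag `23-TT-9253` is matched to `23-TT-92532` by the similarity score, and the free-text description is routed to the review queue. The design choice is the point of the sketch: cheap deterministic rules handle the clear cases, a scoring model handles near misses, and anything uncertain goes to a subject matter expert rather than being silently accepted.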